|

uThreads

0.3.0

uThreads: Concurrent User Threads in C++

|

uThreads is a concurrent library based on cooperative scheduling of user-level threads(fibers) implemented in C++. User-level threads are lightweight threads that execute on top of kernel threads to provide concurrency as well as parallelism. Kernel threads are necessary to utilize processors, but they come with the following drawbacks:

Cooperative user-level threads, on the other hand, provide light weight context switches and omit the additional overhead of preemption and kernel scheduling. Most Operating Systems only support a 1:1 thread mapping (1 user-level thread to 1 kernel-level thread), where multiple kernel threads execute at the same time to utilize multiple cores and provide parallelism. e.g., Linux supports only 1:1 thread mapping. There is also N:1 thread mapping, where multiple user-level threads can be mapped to a single kernel-level thread. The kernel thread is not aware of the user-level threads existence. For example, Facebook's folly::fiber, libmill, and libtask use N:1 mapping. Having N:1 mapping means if the application blocks at the kernel level, all user-level threads are blocked and application cannot move forward. One way to address this is to only block on user level, hence, blocking user-level threads. This setting works very well with IO bound applications, however, if a user thread requires using a CPU for a while, it can block other user threads and the task is better to be executed asynchronously on another core to prevent this from happening. In order to avoid this problem, user threads can be mapped to multiple kernel-level threads. Thus, creating the third scenario with M:N or hybrid mapping. e.g., go and uC++ use M:N mapping.

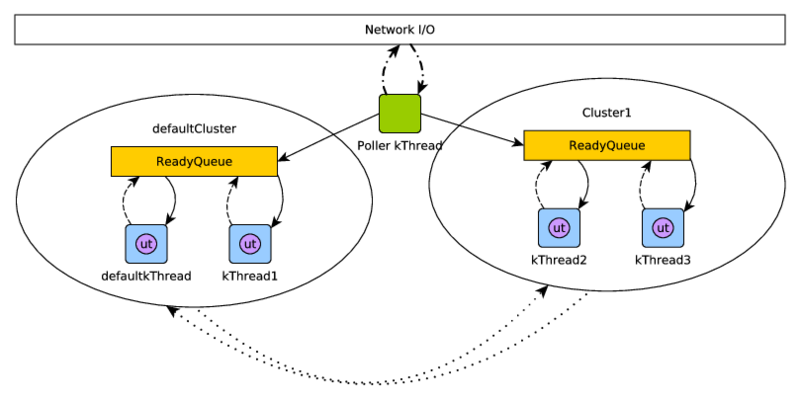

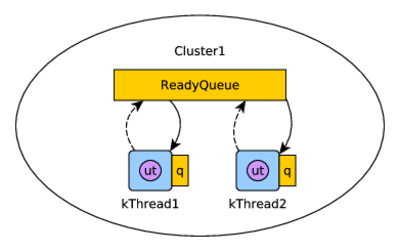

uThreads supports M:N mapping of uThreads (user-level threads) over kThreads (kernel-level threads) with cooperative scheduling. kThreads can be grouped together by Clusters, and uThreads can migrate among Clusters. Figure 1 shows the structure of an application implemented using uThreads using a single ReadyQueue Scheduler. You can find the documentation here http://samanbarghi.github.io/uThreads.

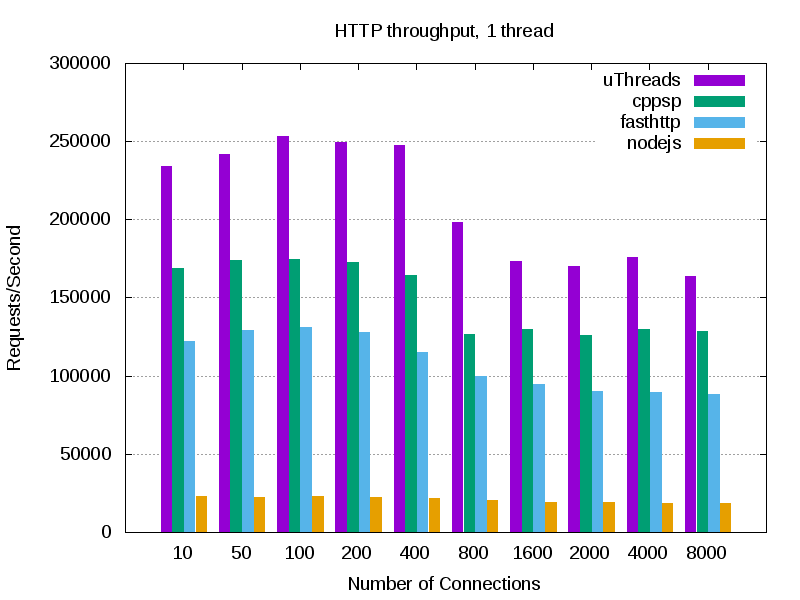

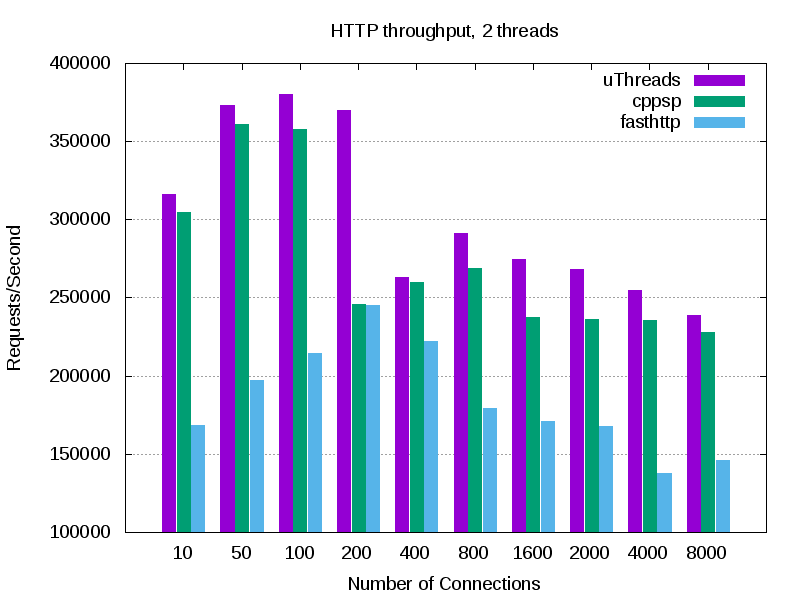

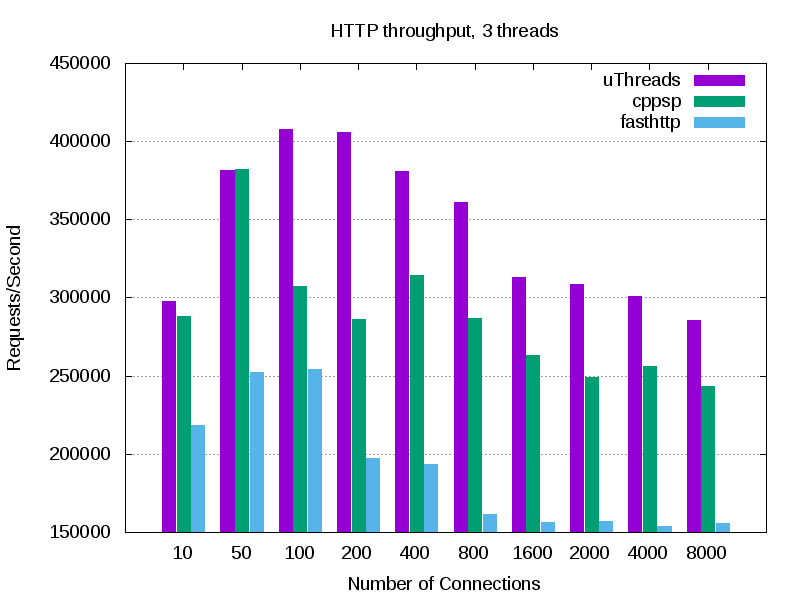

Here are a comparsion of a simple webserver throughput with fasthttp, cppsp, and nodejs. Experiments are executed on my laptop (i7 quad core). Note that not much optimization is applied to any of the applications, thus there might me some space to squeeze more throughput out of these applications. You can check the source code of the sample webserver under the test directory. All servers return a "hello world" response, and the client (in this case wrk) throws a huge number of concurrent and pipelined requests at each server. This experiment shows the overhead of each framework since the response is kept very small (similar to TechEmpower "plaintext" benchmark").

Currently uThreads only supports Linux on x86_64 platforms. It also depends on the following:

To build and install the library issue the following commands:

Currently, everything is installed under /usr/local/lib and /usr/local/include/uThreads. To change this you need to edit the Makefile and change the DEST_DIR.

There are sample applications under test directory, to compile them issue make test, and you can find the binaries under bin directory. Refer to the documentation for more information.

This section explains the internals of uThreads.

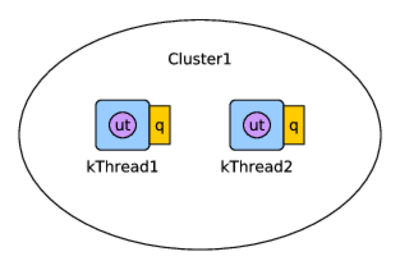

Clusters are used to group kThreads together. Each Cluster can contain one or more kThreads, but each kThread only belongs to a single Cluster. Each Cluster includes a Scheduler which is used to schedule uThreads over kThreads in that Cluster. Application programmer decides how many kThreads belong to a Cluster by assigning them upon creation. Clusters can be used to execute different tasks over separate kThreads and if pinned properly, over separate cores. For exampl,e they can be used to provide better CPU cache locality for different set of tasks, by executing them on specific cores.

kThreads are kernel-level threads (std::thread), that are the main vehicle to utilize cores and execute the program. Each kThread can interact with the local scheduler in the Cluster and execute the uThreads provided by the local Scheduler, but it can move uThreads to another Cluster in the application. The former can happen when uThreads yield or block at user level, and the latter happens when uThreads migrate to another Cluster. Migration let the uThread continue execution on a different set of kThreads based on the requirements of the code.

uThreads are the main building blocks of the library. They are either sitting in a readyQueue or runQueue waiting to be picked by a kThread, running by a kThread, or blocked and waiting for an event to occur. uThreads are being scheduled cooperatively over Clusters, they can either yield, migrate or block on an event to let other uThreads utilized the same kThread they are being executed over.

Each application has at least one Cluster, one kThread and one uThread. Each C++ application has at least one thread of execution (kernel thread) which runs the main() function. A C++ application that is linked with uThreads library, upon execution, creates a defaultCluster, a wrapper around the main execution thread and call it defaultkThread, and also a uThread called mainUT to take over the defaultkThread stack and run the main function.

In addition, there is a single Poller kThread which is responsible for polling the network devices, and multiplexing network events over the Cluster. uThreads provides a user-level blocking on network events, where network calls are non-blocking at the kernel-level but uThreads block on network events if the device is not ready. The poller thread is thus responsible for unblocking the uThreads upon receiving the related network event. The poller thread is using edge triggered epoll in Linux, and which is similar to how Golang supports multiplexing of network events.

Currently, uThreads only supports fixed stack sizes for performance purposes. uThread's stack is cached after finishing execution to avoid the extra overhead of memory allocation.

As Explained earlier, each Cluster has a local Scheduler which is responsible for distributing uThreads among the kThreads whithin that Cluster. Currently, there are 4 different schedulers, which will be explained below. These schedulers do not support any work sharing or work stealing at the moment and based on the type of the Scheduler either uThreads are assigned in a round robin manner, or kThreads ask the Scheduler for more uThreads when they run out of work. The type of the scheduler is determined at compile time by defining SCHEDULERNO and pass the related scheduler number. The default scheduler is Scheduler #2, with local instrusive Multiple-Producer-Single-Consumer Queues per kThread (since it provides better performance and scalability).

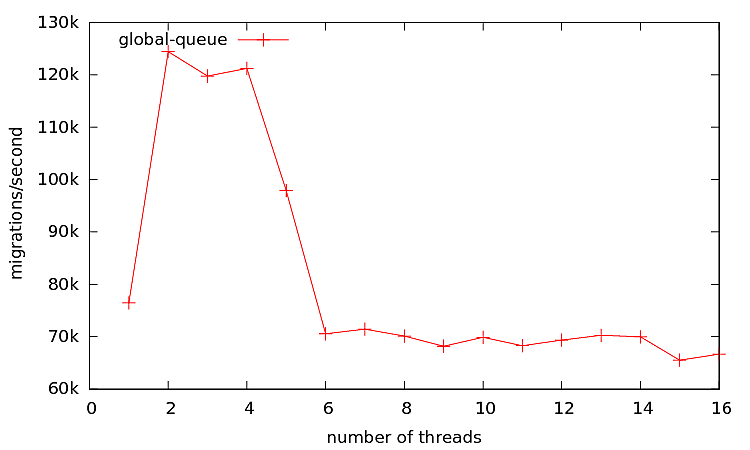

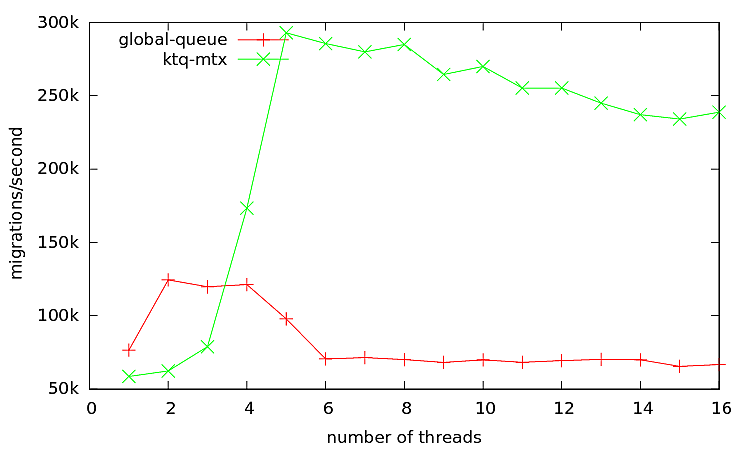

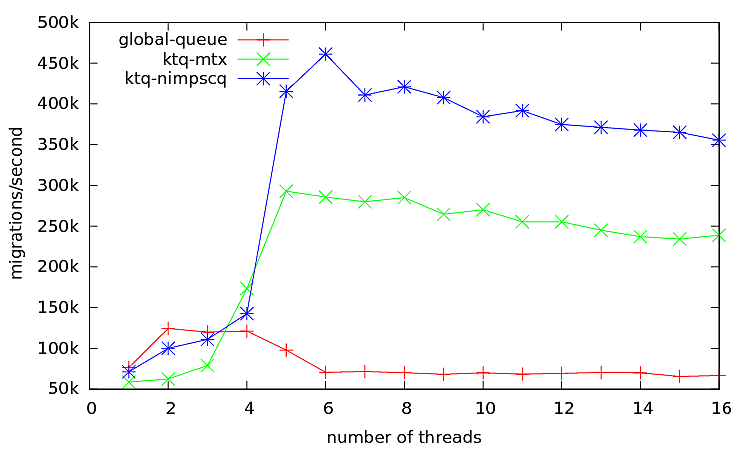

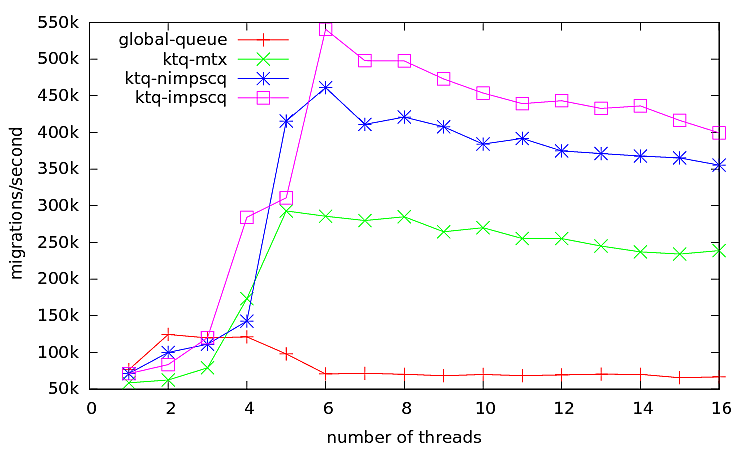

Figure 3 shows result for the first Scheduler:

Based on the results, this approach is not very scalable past 4 kThreads per Cluster.

For lower number of threads the global queue seems to do better, but as the number increases the intrusive lock-free MPSC Queue is a better Choice. The default scheduler for uThreads is Scheduler #2 (intrusive MPSCQ), which can be changed at compile time by definding SCHEDULERNO and set it to the appropriate scheduler.

You can add your own scheduler by looking at the source code under src/runtime/schedulers, and provide a scheduler number and a Scheduler class in its own header. Documentation for adding a new scheduler will be added as soon as the code base reaches a stable state.

uThreads can be joinable, which means upon creation the creator uThread can block until they finish execution. There are three ways to execute a piece of code on another Cluster (These can be used to execute tasks asynchronously on current Cluster or a remote one):

uThreads also provides user-level blocking synchronization and mutex primitives. It has basic Mutex, Condition Variable and Semaphore. You can find examples of their usage under test directory.

You can find various examples under the test directory in the github repo. There is an EchoClient and EchoServer implemented using uThreads.

There is also a simple webserver to test uThreads functionality.

For performance comparisons, memached code has been updated to use uThreads instead of event loops (except the thread that accepts connections), where tasks are assigned to uThreads instead of using the underlying event library. The code can be found here.

This work made possible through invaluable helps and advices I received from Martin Karsten.

You can find the source code here: https://github.com/samanbarghi/uThreads.

1.8.11

1.8.11